If you are GPU-Poor, then you should be paying attention to synthetic data & small LLMs

What's going on with small LLMs and why should we care about it?

Large Language Models (LLMs) are advancing at an unparalleled pace, compelling the global AI community to innovate or risk obsolescence. This essay argues that, for anyone outside the major AI labs, the key to staying relevant lies in concentrating on specialized, powerful small LLMs. Such models can bolster the open-source community and individual developers in the era of mega models.

What is the state of frontier AI?

Consider Anthropic's ambitious, publicly reported plans. The company intends to train a new frontier model that would require on the order of 10^25 FLOPs (floating point operations), several orders of magnitude more than even the biggest models today.

“These models could begin to automate large portions of the economy,” their latest pitch deck reads. “We believe that companies that train the best 2025/26 models will be too far ahead for anyone to catch up in subsequent cycles.”

Likewise, OpenAI likely has similar plans, and recent rumors hint that it is still making progress. According to these rumors, OpenAI has a powerful new model code-named "Arrakis" that was reportedly trained on a dataset that was half synthetic, surpasses GPT-4 in capability, and performs close to human experts across various fields.

What should Open Source do?

In answer to this pressing question, this essay advocates a strategic reallocation of resources toward building exceptionally powerful small models.

Open-source LLMs are emerging as a pivotal force with the potential to bridge this technological divide. Still-nascent research is revealing promising avenues and suggests that the limits of what can run on GPU-poor setups can be pushed further than we once thought.

To explore this, we begin with an overarching concept: knowledge distillation. Popularized by Geoffrey Hinton et al. in 2015, knowledge distillation trains a smaller "student" model to reproduce the behavior of a larger "teacher" model, condensing much of the teacher's capability into a far more compact network. Synthetic data is a natural complement: data generated by a model rather than collected from people, which can then be used to train other models. In the realm of LLMs, one way to distill through synthetic data is to have a strong model write synthetic textbooks, an approach recently leveraged by Phi-1.5, which appears in the timeline below. Let’s examine this and some of the other intriguing recent advances in the field.
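For readers who want the mechanics, the classic soft-target formulation from Hinton et al. fits in a few lines: the student is trained to match the teacher's temperature-softened output distribution, blended with ordinary cross-entropy on the true label. This is a minimal, dependency-free sketch for a single example; the function names, the temperature of 2.0, and the 50/50 weight `alpha` are illustrative assumptions, and a real setup would compute the same quantities over batches in a framework such as PyTorch.

```python
import math
from typing import Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) for discrete distributions; terms with p_i == 0 contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    true_label: int,
    temperature: float = 2.0,  # illustrative assumption
    alpha: float = 0.5,        # illustrative assumption: weight on the soft-target term
) -> float:
    """Blend of a soft-target term (match the teacher) and a hard-label term."""
    # Soft targets: the student mimics the teacher's softened distribution.
    teacher_soft = softmax(teacher_logits, temperature)
    student_soft = softmax(student_logits, temperature)
    # Scaling by T^2 keeps the soft-target term's gradients comparable across temperatures.
    soft_term = kl_divergence(teacher_soft, student_soft) * temperature**2
    # Hard targets: ordinary cross-entropy against the ground-truth label at T = 1.
    hard_term = -math.log(softmax(student_logits)[true_label])
    return alpha * soft_term + (1 - alpha) * hard_term


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]
    student = [2.0, 1.5, 0.2]
    print(distillation_loss(student, teacher, true_label=0))
```

When only a teacher's generated text is available, as with API-only models, this logit-matching form is not possible and distillation reduces to ordinary training on the teacher's outputs, which is essentially the route the synthetic-data approaches in this post take.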

Start by reading the literature

Given the pace of progress and the ambitions of labs like Anthropic and OpenAI, immersing ourselves in the latest literature is not just imperative but foundational. This is more than a scholarly exercise: it reveals the strategies the AI community is using to navigate the challenges and seize the opportunities created by the evolution of LLMs. The timeline below highlights recent methods and breakthroughs, with particular emphasis on the pivotal role of synthetic data in building increasingly powerful small models.

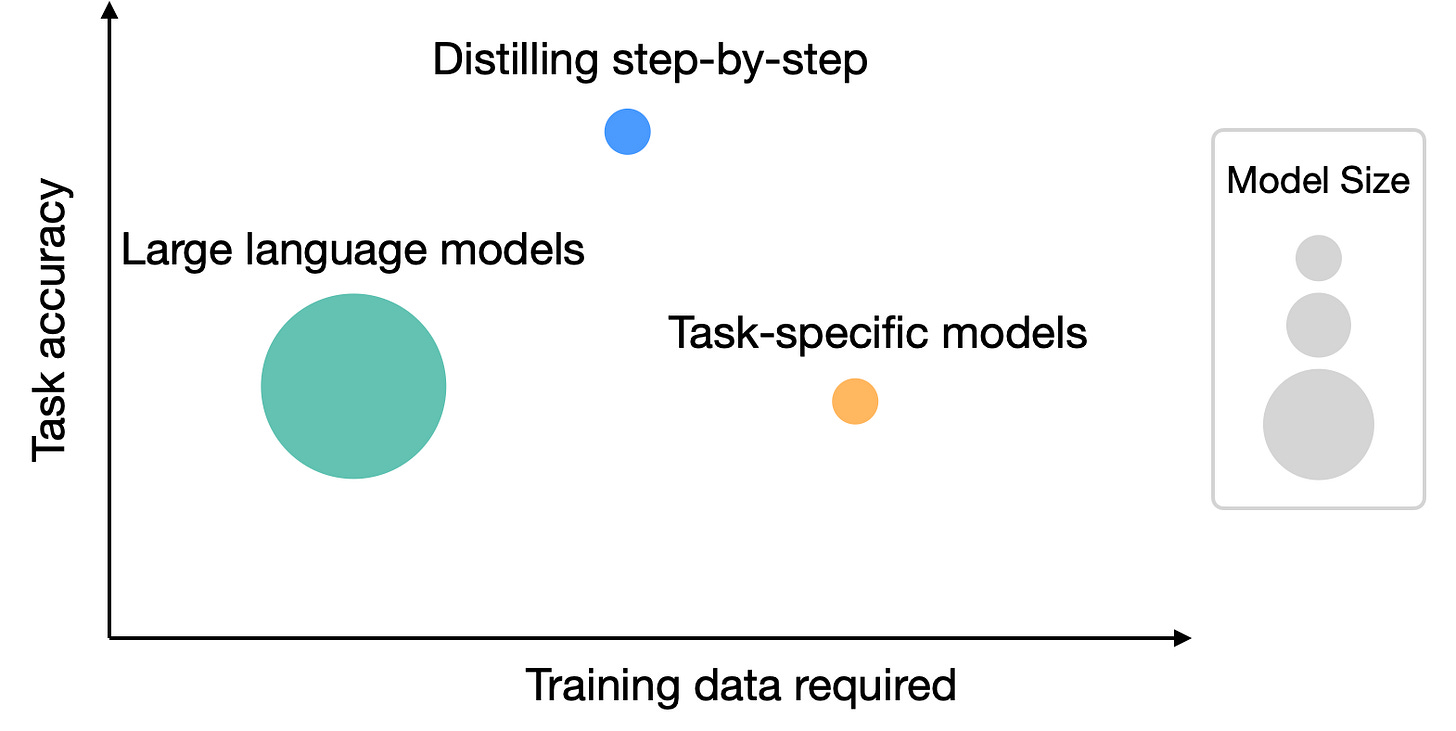

Above: Google recently demonstrated a more than 700x reduction in model size, using much less training data than standard approaches require, while still achieving robust step-by-step reasoning.

Self-Instruct [Dec 2022]

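uses instructions generated by the model itself for fine-tuning, achieving a 33% absolute improvement over the original GPT-3 on Super-NaturalInstructions and outperforming tuning on existing public instruction datasets, which highlights the potential of annotation-free methods for improving language models.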
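One concrete ingredient of Self-Instruct is its diversity filter: a newly generated instruction joins the task pool only if its ROUGE-L similarity to every existing instruction is below 0.7. The sketch below shows only that filtering step under simplifying assumptions: the generation step is left out, tokenization is plain lowercase whitespace splitting (a stand-in for a proper ROUGE tokenizer), and the helper names are hypothetical.

```python
def lcs_length(a: list[str], b: list[str]) -> int:
    """Length of the longest common subsequence of two token lists (dynamic programming)."""
    prev = [0] * (len(b) + 1)
    for tok_a in a:
        curr = [0] * (len(b) + 1)
        for j, tok_b in enumerate(b, start=1):
            if tok_a == tok_b:
                curr[j] = prev[j - 1] + 1
            else:
                curr[j] = max(prev[j], curr[j - 1])
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    """ROUGE-L F-measure over lowercase whitespace tokens (simplified tokenization)."""
    c, r = candidate.lower().split(), reference.lower().split()
    if not c or not r:
        return 0.0
    lcs = lcs_length(c, r)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(c), lcs / len(r)
    return 2 * precision * recall / (precision + recall)


def add_if_novel(pool: list[str], candidate: str, threshold: float = 0.7) -> bool:
    """Append candidate to the pool only if it is not too similar to any existing instruction."""
    if any(rouge_l_f1(candidate, existing) >= threshold for existing in pool):
        return False
    pool.append(candidate)
    return True


if __name__ == "__main__":
    pool = ["Write a poem about the ocean.", "Summarize the following article."]
    print(add_if_novel(pool, "Write a poem about the sea."))           # near-duplicate, rejected
    print(add_if_novel(pool, "Translate this sentence into French."))  # novel, accepted
```

Filtering near-duplicates this way keeps a self-generated instruction set from collapsing onto a few templates as it grows.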

Unnatural Instructions [Dec 2022]

builds a large dataset of creative instructions collected with virtually no human labor, showing that model-generated data can be a cost-effective and competitive alternative to manually curated training data.

TinyStories [May 2023]

introduces a synthetic dataset of short stories written with words a typical 3- to 4-year-old understands, enabling language models with fewer than 10 million parameters to produce coherent text and offering insights into language capabilities in low-resource or specialized domains.
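A key trick behind TinyStories' diversity is randomizing each generation prompt: a verb, a noun, and an adjective are sampled from a simple vocabulary, optionally along with story features such as dialogue or a bad ending. The sketch below assumes tiny hypothetical word lists and prompt wording modeled loosely on the paper's; the real vocabulary is much larger and the exact template may differ.

```python
import random

# Hypothetical miniature word lists; the real vocabulary is far larger.
NOUNS = ["ball", "tree", "puppy", "cake", "boat"]
VERBS = ["jump", "share", "find", "build", "sing"]
ADJECTIVES = ["happy", "tiny", "shiny", "brave", "sleepy"]
FEATURES = ["dialogue", "a plot twist", "a bad ending", "a moral lesson"]


def build_story_prompt(rng: random.Random) -> tuple[str, dict[str, str]]:
    """Compose one generation prompt from randomly sampled words and story features."""
    words = {
        "verb": rng.choice(VERBS),
        "noun": rng.choice(NOUNS),
        "adjective": rng.choice(ADJECTIVES),
    }
    # Zero to two optional features per story add further variety.
    features = rng.sample(FEATURES, k=rng.randint(0, 2))
    prompt = (
        "Write a short story (3-5 paragraphs) using only very simple words "
        "that a 3-year-old would understand. "
        f"The story should use the verb \"{words['verb']}\", "
        f"the noun \"{words['noun']}\" and the adjective \"{words['adjective']}\"."
    )
    if features:
        prompt += " The story should include " + " and ".join(features) + "."
    return prompt, words


if __name__ == "__main__":
    rng = random.Random(0)  # seeded so the prompt set is reproducible
    for _ in range(3):
        print(build_story_prompt(rng)[0])
```

Because each prompt forces a different word combination, the generating model cannot fall back on the same few stories, which is the main failure mode when sampling many times from one fixed prompt.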

Orca [June 2023]

learns from rich explanation traces produced by GPT-4, surpassing conventional instruction-tuned models such as Vicuna-13B on complex zero-shot reasoning benchmarks and indicating the promise of learning from step-by-step explanations.

Distilling Step-by-Step [July 2023]

trains smaller models efficiently using LLM-generated rationales and less data; notably, a fine-tuned 770M-parameter T5 model outperformed the few-shot prompted 540B PaLM model on a benchmark task, emphasizing the efficacy of the approach.
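The core of Distilling Step-by-Step is a multi-task setup: each teacher annotation yields two training targets for the student, the label and the teacher's rationale, and the two losses are summed with a weight on the rationale term. The sketch below shows only the data shape and that arithmetic, not a training loop; the `[label]` / `[rationale]` prefixes, the `TeacherOutput` record, and the example question are assumptions for illustration. It also checks the size gap behind the "more than 700x" figure.

```python
from dataclasses import dataclass


@dataclass
class TeacherOutput:
    """One example annotated by a large teacher LLM (hypothetical record type)."""
    question: str
    rationale: str
    label: str


def to_multitask_examples(ex: TeacherOutput) -> list[tuple[str, str]]:
    """Split one teacher annotation into two (input, target) pairs for the student.

    The task prefixes tell the student which output to produce; at inference
    time only the label task is used, so no rationale has to be generated.
    """
    return [
        (f"[label] {ex.question}", ex.label),
        (f"[rationale] {ex.question}", ex.rationale),
    ]


def combined_loss(label_loss: float, rationale_loss: float, rationale_weight: float = 1.0) -> float:
    """Total loss = label loss + weight * rationale loss."""
    return label_loss + rationale_weight * rationale_loss


# Size gap between the 540B-parameter teacher and the 770M-parameter student.
size_ratio = 540e9 / 770e6  # roughly 701, i.e. "more than 700x"

if __name__ == "__main__":
    ex = TeacherOutput(
        question="If a pen costs $2, how much do 3 pens cost?",
        rationale="Each pen costs $2, so 3 pens cost 3 * 2 = 6 dollars.",
        label="$6",
    )
    for source, target in to_multitask_examples(ex):
        print(source, "->", target)
    print(round(size_ratio, 1))
```

Keeping the rationale as a separate task, rather than as a prefix of the answer, is what lets the small model skip rationale generation at inference time.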

Code Llama [Aug 2023]

releases a family of high-performing language models for code that reach state-of-the-art results among open models on several code benchmarks, with improvements observed even from a small set of high-quality coding data, underlining the potential of specialized models. It also demonstrates the value of unnatural instructions through an unreleased variant, Unnatural Code Llama.

Textbooks Are All You Need II [Sep 2023]

introduces Phi-1.5, a 1.3B-parameter model trained mostly on synthetic textbook-style data that performs comparably to models 5x larger on natural language tasks, emphasizing the surprising capabilities of small LLMs developed with synthetic data.

What does this all tell us?

Major labs such as Anthropic and OpenAI are accelerating toward a future in which the colossal power of models like "Arrakis" could reshape economies.

In this landscape, and given the results above, it appears to me that we should pivot toward developing specialized, potent small LLMs. Small models are a promising avenue for raising capabilities globally: they support diverse applications and, by decentralizing intelligence, mitigate some of the risks associated with larger models. Moreover, it seems we are now poised to make great strides in this effort by distilling larger models.

This is why I am now working to replicate the results of Phi-1.5. I originally intended to write an article summarizing what I learned over the past week, but as I dug deeper I realized it made more sense to lay out the motivation first.

What might Open Source do?

It is now imperative for the open-source community to stay competitive and forward-looking. I don’t speak for the whole open-source community, but as someone who wants to contribute to the space, I can outline what I plan to do:

I’m trying to understand and solve the challenges around generating large synthetic datasets.

I’m looking into the impact of various choices in dataset generation (such as retrieval-augmented generation, or RAG).

I’m moving quickly to build a quantitative understanding of how novel data, synthetic and otherwise, affects model pre-training and fine-tuning.

I’m making plans now for how to scale up these efforts later.
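To make the RAG choice in dataset generation concrete, here is one possible setup: before asking a model to write a synthetic textbook section, retrieve relevant reference passages and place them in the prompt so the output is grounded in real material. This is a minimal sketch under loud assumptions: the corpus, prompt wording, and function names are hypothetical, and simple token overlap stands in for the embedding-based retrieval a real pipeline would more likely use.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query: str, passage: str) -> int:
    """Number of shared tokens (counting repeats up to the smaller count)."""
    q, p = Counter(tokenize(query)), Counter(tokenize(passage))
    return sum((q & p).values())


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Return the k passages with the highest token overlap with the query."""
    return sorted(corpus, key=lambda passage: overlap_score(query, passage), reverse=True)[:k]


def build_textbook_prompt(topic: str, corpus: list[str], k: int = 2) -> str:
    """Ground a synthetic-textbook generation prompt in retrieved reference passages."""
    context = "\n".join(f"- {passage}" for passage in retrieve(topic, corpus, k))
    return (
        f"Using the reference material below, write a clear textbook section on {topic}.\n"
        f"Reference material:\n{context}\n"
    )


if __name__ == "__main__":
    corpus = [
        "Binary search halves the search interval at each step.",
        "Photosynthesis converts light into chemical energy.",
        "A sorted array allows binary search in logarithmic time.",
    ]
    print(build_textbook_prompt("binary search on a sorted array", corpus))
```

The retriever, the number of passages `k`, and the prompt template are examples of the generation-time knobs whose effect on the resulting dataset can be measured.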

Initial evidence suggests that synthetic data could be the gateway to building models with capabilities akin to GPT-4 while using significantly fewer resources. However, there is still a lot for us to understand. This endeavor is interconnected with other great open-source projects, such as OS Skunkworks' research into Mixture of Experts (MoE), Alignment Lab’s work on Open-Orca, and Nous Research’s work on Hermes.

I will soon publish a full write-up of what I have learned so far in attempting to replicate Phi-1.5. For now, I can share a large synthetic textbook dataset, available online here, and a public repository here.

Let’s Collaborate

I have had the good fortune to come across a small group of other smart, motivated people who are working on similar things. If you would like to join this informal collaboration, please reach out directly or join the Discord here.

We need people who are interested in building the datasets, researching fine-tuning and pre-training, and contributing to the software.